2025-11-23 Synergies in the Robot + AI Industry

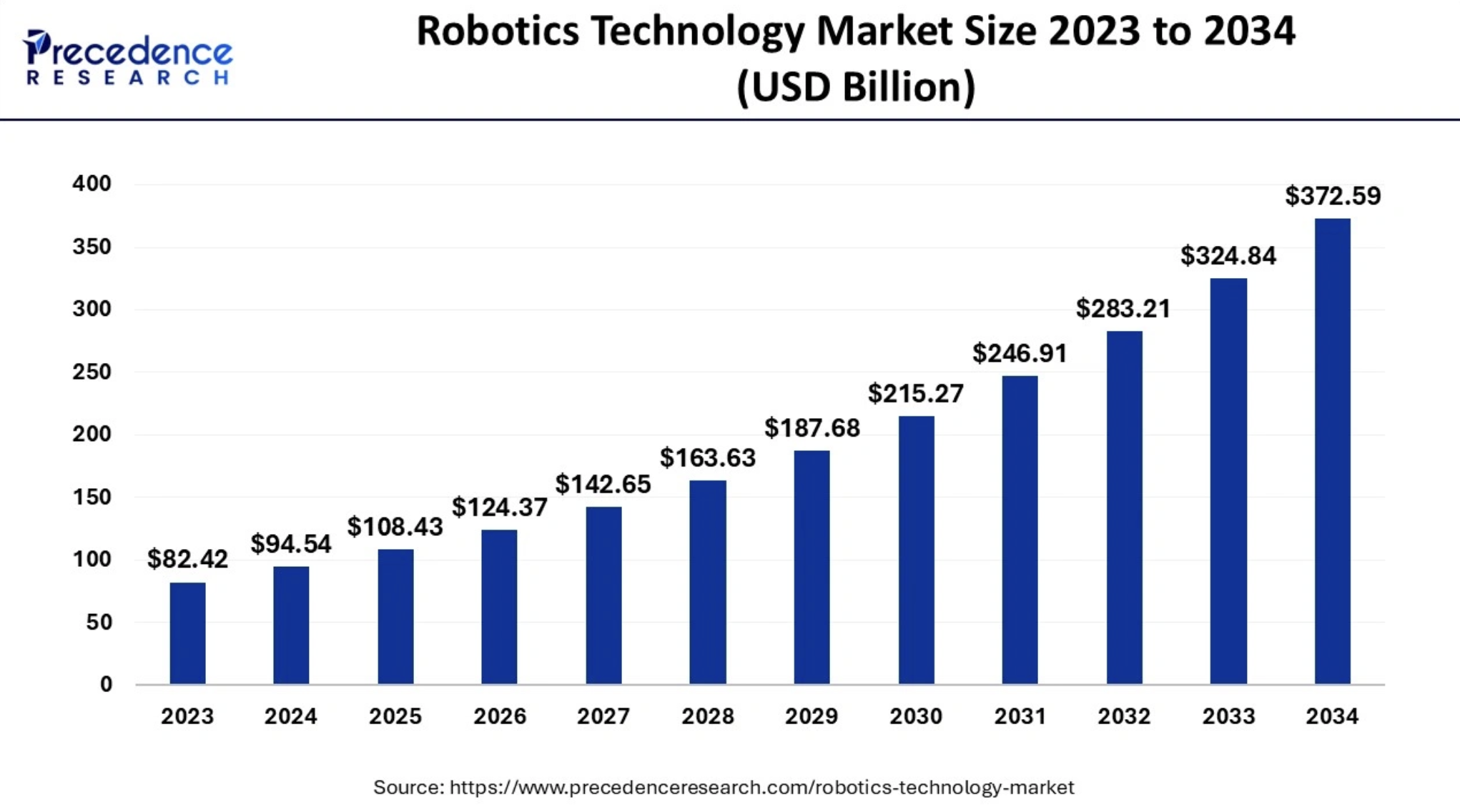

Robotics adoption is being driven by secular trends: labor shortages, automation in manufacturing, the rise of AI, and demand for service robots (especially in healthcare and logistics).


- Physical AI / Generative + Analytical AI in Robots

- According to the International Federation of Robotics, one major trend is “Physical, Analytical, and Generative AI” in robotics.

- Physical AI means robots can “train” in virtual environments and learn from experience, not just follow pre-programmed rules.

- Generative AI is being used to enable more adaptable, “creative” robot behaviour: rather than only reacting to precise rules, robots can synthesize actions in more flexible ways.

- Vision-Language-Action (VLA) Models for Robots

- Google DeepMind released Gemini Robotics, a vision-language-action model: it uses multimodal reasoning (vision + language) to let robots act more intelligently in the physical world.

- Gemini Robotics-ER (“Embodied Reasoning”) supports more complex reasoning about physical tasks (e.g., understanding how to pack efficiently).

- There are other VLA models too, e.g., Figure AI’s Helix, which decouples high-level perception from motor control to allow both generalization and fast control.

- NVIDIA’s “GR00T N1” is another VLA model that combines a vision-language model for perception with a motion policy for action.
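The decoupling described for Helix and GR00T N1 can be illustrated with a toy two-rate loop: a slow "planner" that updates a goal only occasionally, and a fast "controller" that acts on every tick. This is a minimal sketch under stated assumptions, not any vendor's API. The names `slow_planner`, `fast_controller`, `Goal`, and `plan_every` are hypothetical, and a one-dimensional position stands in for real perception and motor commands.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    target: float  # desired 1-D position; stands in for a latent "intent"


def slow_planner(instruction: str, observation: float) -> Goal:
    """Hypothetical stand-in for a vision-language model: instruction -> goal."""
    targets = {"move left": -1.0, "move right": 1.0}
    # Unknown instructions keep the robot where it currently is.
    return Goal(target=targets.get(instruction, observation))


def fast_controller(goal: Goal, position: float, gain: float = 0.5) -> float:
    """Hypothetical stand-in for a motion policy: proportional step to the goal."""
    return gain * (goal.target - position)


def run(instruction: str, steps: int = 20, plan_every: int = 5) -> float:
    position = 0.0
    goal = Goal(target=position)
    for t in range(steps):
        if t % plan_every == 0:  # slow loop: replan only occasionally
            goal = slow_planner(instruction, position)
        position += fast_controller(goal, position)  # fast loop: every tick
    return position
```

Calling `run("move right")` drives the position toward 1.0 even though the planner is consulted on only one tick in five, which is the point of separating the two rates.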

- Edge AI & On-Device Intelligence

- Robots are increasingly using edge computing to run AI models locally, which reduces reliance on cloud, lowers latency, and improves reliability.

- For example, DeepMind’s “Gemini Robotics On-Device” is optimized to run directly on robot hardware, enabling real-time decision-making.

- This edge AI is particularly important for real-time robotics tasks (e.g., navigation, manipulation) where delays are costly or dangerous.
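The latency argument can be made concrete with a simple budget check: a control loop running at a given rate leaves a fixed time window per decision, and inference plus any network round trip has to fit inside it. The sketch below uses illustrative numbers only; the function name and all timings are assumptions, not measurements of any real system.

```python
def fits_control_budget(
    control_hz: float, inference_ms: float, network_rtt_ms: float = 0.0
) -> bool:
    """Return True if one decision fits inside one control period."""
    period_ms = 1000.0 / control_hz  # time available per control tick
    return inference_ms + network_rtt_ms <= period_ms


# Illustrative (assumed) numbers for a 50 Hz loop, i.e. a 20 ms period.
on_device_ok = fits_control_budget(50, inference_ms=12.0)
cloud_ok = fits_control_budget(50, inference_ms=5.0, network_rtt_ms=40.0)
```

With these assumed values the on-device path fits the 20 ms window, while the cloud path does not, even though its inference step alone is faster.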

- Physical AI Agents

- There’s newer academic work on “Physical AI Agents”: systems that merge cognitive intelligence (reasoning, planning) with physical actuation.

- Their architecture typically has three core modules: perception (sensing), cognition (decision-making), and actuation (physical execution).

- The authors also propose “Ph-RAG” (Physical Retrieval Augmented Generation), which connects embodied intelligence with LLM-style memory/knowledge for real-time decision making.

- This is a compelling direction: robots that are not just reactive but “thinking” in a human-like way while acting in the real world.
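The three-module architecture, with a retrieval step in the spirit of Ph-RAG, can be sketched as a single control step. This is a toy illustration and not the cited authors' implementation: the `KNOWLEDGE` store, the sensor field names, the thresholds, and the speed values are all hypothetical, and a dictionary lookup stands in for retrieval over a real knowledge base.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    obstacle_distance_m: float
    surface: str


# Hypothetical knowledge store; a real system would retrieve from a larger corpus.
KNOWLEDGE = {
    "wet": "reduce speed on wet floors",
    "gravel": "reduce speed on loose gravel",
    "dry": "normal speed is acceptable",
}


def perceive(raw: dict) -> Percept:
    """Perception: turn raw sensor readings into a structured percept."""
    return Percept(float(raw["lidar_m"]), str(raw["surface"]))


def retrieve(percept: Percept) -> str:
    """Retrieval: look up stored guidance relevant to the current percept."""
    return KNOWLEDGE.get(percept.surface, "no stored guidance")


def decide(percept: Percept, guidance: str) -> str:
    """Cognition: combine the percept with retrieved guidance."""
    if percept.obstacle_distance_m < 0.5:  # assumed safety threshold
        return "stop"
    if guidance.startswith("reduce speed"):
        return "slow"
    return "go"


def actuate(command: str) -> float:
    """Actuation: map a command to a normalized speed setpoint."""
    return {"stop": 0.0, "slow": 0.3, "go": 1.0}[command]


def step(raw: dict) -> float:
    percept = perceive(raw)
    return actuate(decide(percept, retrieve(percept)))
```

For example, `step({"lidar_m": 3.0, "surface": "wet"})` yields the reduced speed setpoint because the retrieved guidance changes the decision, while a nearby obstacle overrides everything and stops the robot.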

- Human-Centered AI (HCAI) and Trustworthy Robotics

- As robots become more autonomous, there’s a growing focus on human-centered AI in robotics.

- This research emphasizes balancing robot autonomy with human control, ensuring trust, safety, and ethical behavior.

- Autonomy is important, but so is ensuring robots’ decisions are understandable, safe, and aligned with human intentions.

- Neurosymbolic AI for Industrial Robotics

- There’s work (e.g., “BANSAI”) that proposes neuro-symbolic programming to bridge traditional robot programming and data-driven learning.

- The idea: combine symbolic reasoning (which is robust, interpretable) with neural networks (which are flexible, learn from data) to make industrial robots more intelligent and easier to program.
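One common way to combine the two styles is to let symbolic rules decide which actions are permitted and let a learned model rank the permitted ones. The sketch below illustrates that general pattern only; it is not the BANSAI approach itself. The rule set, state keys, and the fixed scores standing in for a neural network are all hypothetical.

```python
from typing import Callable

State = dict

# Symbolic layer: interpretable preconditions for each action (hypothetical).
RULES: dict[str, Callable[[State], bool]] = {
    "pick": lambda s: s["gripper_empty"] and s["part_visible"],
    "place": lambda s: not s["gripper_empty"],
    "wait": lambda s: True,
}


def learned_score(action: str, state: State) -> float:
    """Placeholder for a neural network; fixed preferences for illustration."""
    return {"pick": 0.9, "place": 0.8, "wait": 0.1}[action]


def choose_action(state: State) -> str:
    # Rules filter out actions whose preconditions fail ...
    allowed = [action for action, ok in RULES.items() if ok(state)]
    # ... and the learned scorer ranks whatever remains.
    return max(allowed, key=lambda action: learned_score(action, state))
```

Because the rules act as a hard filter, the scorer can never select `pick` while the gripper is full, no matter how highly it rates that action.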

- Applications & Deployment: Warehouses, Humanoids, Cobots

- In warehousing, AI-powered robots (AMRs, mobile manipulators) are increasingly common, using learned models for navigation, object recognition, and path planning.

- Cobots (collaborative robots) are growing: robots that safely work side-by-side with humans, empowered by AI to understand their environment and react appropriately.

- Humanoid robots: More humanoids are being developed with AI “brains” for tasks like logistics, elder care, or service. For instance, Apptronik raised $350M to scale its humanoid robot, Apollo.

- Partnerships: e.g., NVIDIA is working with Fujitsu on AI robots, building infrastructure for AI-powered robotics.

- There’s also a national / industry push in Korea: the K-Humanoid Alliance was launched in 2025, combining robotics and AI research from major Korean companies and universities.

- Improved Dexterity & Real-World Adaptation

- Boston Dynamics reportedly enhanced its Atlas robot’s dexterity via deep learning / reinforcement learning, enabling finer motor skills.

- AI helps robots adapt to dynamic environments — not just in a controlled factory, but in more unpredictable, human-centric spaces.

- Safety and reasoning are getting better: e.g., DeepMind’s models assess action safety before execution.

- Networked Robotics + Semantic Communications

- A recent academic paper proposes combining generative AI agents with semantic communications in robot networks.

- Semantic communication focuses on sending meaningful information (not just raw data), allowing more efficient coordination among robots.

- This can lead to lower latency, less bandwidth usage, and better collaboration in multi-robot systems.
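The bandwidth claim can be illustrated by comparing a raw sensor payload with a message that carries only what the receiver needs. The sketch below is a crude stand-in, assuming an occupancy grid as the raw data and a list of obstacle cells as the "meaning". The function names are hypothetical, and real semantic communication schemes typically rely on learned encoders rather than a hand-written format.

```python
import json


def raw_payload(grid: list[list[int]]) -> bytes:
    """Send every cell of the grid, one byte per cell."""
    return bytes(cell for row in grid for cell in row)


def semantic_payload(grid: list[list[int]]) -> bytes:
    """Send only the obstacle coordinates, which is what a peer acts on."""
    obstacles = [
        [r, c]
        for r, row in enumerate(grid)
        for c, cell in enumerate(row)
        if cell
    ]
    return json.dumps({"obstacles": obstacles}, separators=(",", ":")).encode("utf-8")


def decode_semantic(payload: bytes, rows: int, cols: int) -> list[list[int]]:
    """Rebuild the grid on the receiving robot."""
    grid = [[0] * cols for _ in range(rows)]
    for r, c in json.loads(payload)["obstacles"]:
        grid[r][c] = 1
    return grid


# Assumed example: a mostly empty 20x20 map with two obstacles.
example_grid = [[0] * 20 for _ in range(20)]
example_grid[3][4] = 1
example_grid[10][15] = 1
```

For this sparse map the semantic message is much smaller than the 400-byte raw grid and still reconstructs it exactly. The advantage shrinks or reverses as the map fills with obstacles, so the saving depends on how much of the raw data is actually relevant.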

- Scaling via “Robotics as a Service” (RaaS)

- The business model is shifting: more companies are adopting Robotics as a Service, leasing AI-enabled robots rather than buying them.

- This makes advanced robotics more accessible to smaller companies, especially when paired with AI that reduces manual programming and maintenance.
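The lease-versus-buy trade-off comes down to a break-even calculation. Over m months, buying costs the purchase price plus upkeep, and leasing costs the monthly fee times m, so buying becomes cheaper only after purchase price / (fee - upkeep) months. The sketch below encodes that arithmetic; the function name and all figures are hypothetical, and the model ignores financing, depreciation, and resale value.

```python
import math


def breakeven_months(
    purchase_price: float, monthly_upkeep: float, monthly_fee: float
) -> float:
    """Months after which owning costs less in total than leasing."""
    monthly_saving = monthly_fee - monthly_upkeep
    if monthly_saving <= 0:
        # Leasing never costs more per month, so buying never pays off.
        return math.inf
    return purchase_price / monthly_saving


# Assumed figures: 120,000 purchase, 500/month upkeep, 3,000/month RaaS fee.
months = breakeven_months(120_000, 500, 3_000)  # 48.0
```

Under these assumed numbers, a company that expects to use the robot for less than four years, or that cannot commit the capital up front, comes out ahead with the service model.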
